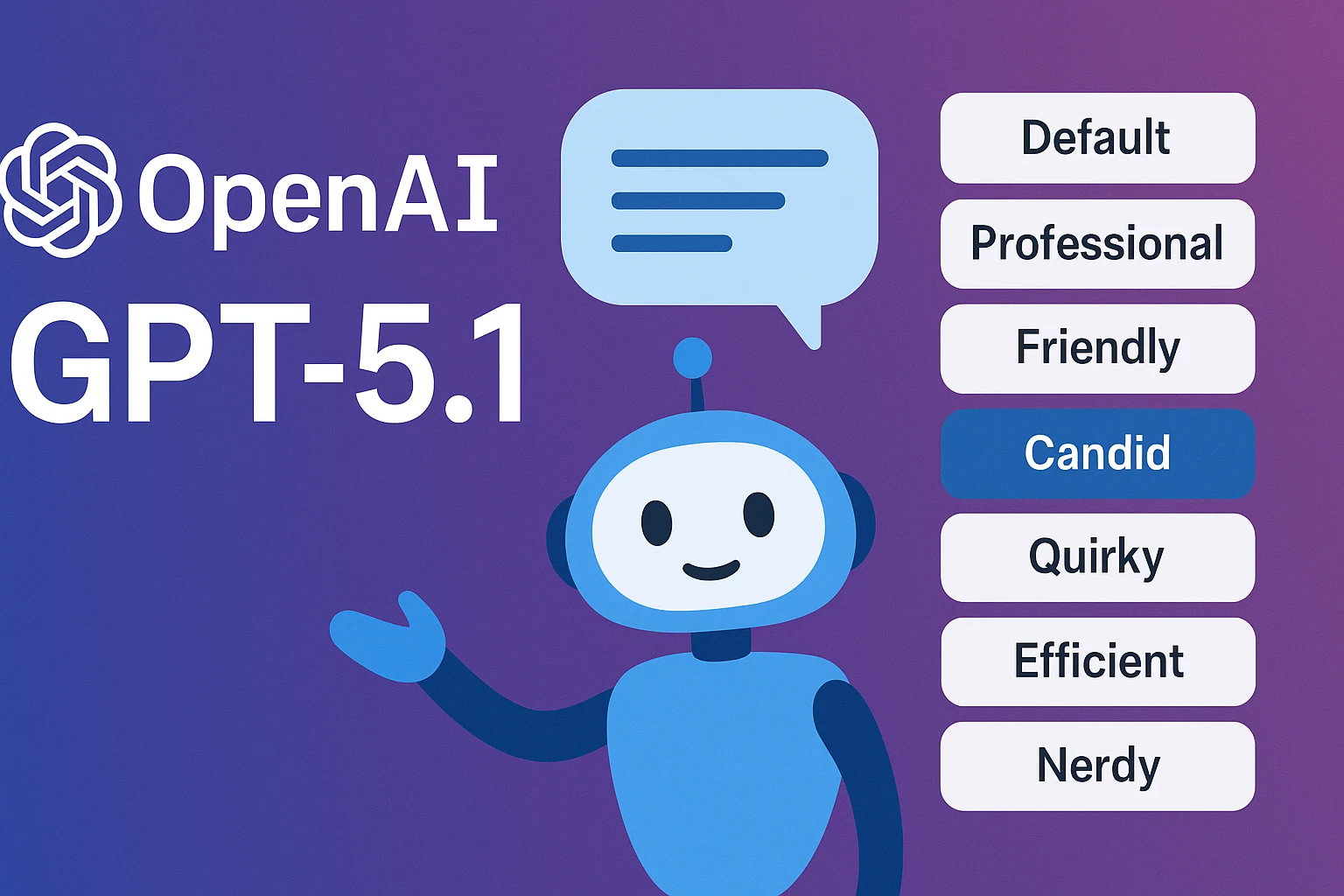

OpenAI’s Bold Move: Eight New ChatGPT Personalities Lift GPT-5.1 into Uncharted Territory

In a major update that blends technical refinement with user-centric flair, OpenAI has unveiled GPT‑5.1, an upgrade to its flagship model designed to strike a balance between raw capability and conversational nuance. Announced on November 12, 2025, the release brings two model variants and eight distinct personality presets, setting the stage for a more tailored, emotionally resonant chat experience (The Verge, Ars Technica).

What’s new in GPT-5.1?

Two variants: GPT-5.1 Instant (optimized for warmth, instruction-following, and faster turnaround) and GPT-5.1 Thinking (aimed at complex reasoning tasks that call for more persistence) (The Verge).

Eight personality styles built into the interface: Default, Professional, Friendly, Candid, Quirky, Efficient, Nerdy, and Cynical. These presets let users choose (or fine-tune) how the model behaves in conversation (The Verge).

A response to feedback: Following mixed reviews of its predecessor, GPT‑5, which some users described as “cold” or overly technical, OpenAI appears to be trying to restore the more human-like presence that people felt was missing (Medium).

Why this matters

User experience is at the forefront. For many users, interacting with an AI isn’t solely about accuracy or speed; it’s also about tone, style, and personality. OpenAI now explicitly acknowledges this by letting users choose the tone of engagement.

Flexibility vs. coherence trade-off. By adding personalities, OpenAI gives users more choice, but it also raises the risk of inconsistent behavior, style drift (the model switching styles mid-conversation), or users picking a tone that weakens performance.

Brand positioning & competitive differentiation. With rivals such as Anthropic and Google DeepMind pushing hard, offering not just capability but a customizable feel becomes a differentiator.

Ethical & safety implications. The more personality variation there is, the subtler the alignment challenge becomes: ensuring the “Cynical” persona, for example, doesn’t become unhelpfully adversarial or less safe.

The tightrope OpenAI is walking

OpenAI is navigating three intertwined pressures:

Capability expectation vs. actual leaps. GPT-5 was supposed to be a big step; some users felt it was incremental, or even a disruptive break from the experience they were used to (Medium).

Consistency vs. personalization. Giving users more personality options runs the risk of fragmenting the behavior of the system and making it harder to guarantee performance or safety across variants.

Engagement vs. manipulation. More conversational warmth or quirky tones may boost engagement, but there is a danger: if the AI feels “too human”, users may over-trust it or misunderstand its limitations.

What users and developers should watch

Pick the right tone for the task. For professional work (e.g., drafting reports, business messaging), the “Professional” or “Efficient” personalities may be most appropriate. For brainstorming or creative dialogue, “Quirky” or “Friendly” might bring more value.

Test for domain strength. Even if you change only the style, performance in domains like code, math, and reasoning should still be assessed: does a “Cynical” persona reduce correctness?

Monitor for drift. When you enable personality presets, put safeguards in place: does the model stay on task, or does the conversational style pull it off the rails?

Consider alignment/safety layers. With personalities, it’s more important than ever to verify outputs, guard against overly casual responses in serious domains (e.g., legal/medical), and ensure the tone doesn’t compromise accuracy.

Developer integrations. If you’re building apps on the OpenAI API, these personalities open new UX possibilities: you could map personas to audience segments, or allow end-users to switch tone dynamically. But you’ll need to handle fallback logic, style transfer and consistency.
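The persona mapping and fallback logic described above could look something like the minimal Python sketch below. It does not assume any persona parameter in the OpenAI API; instead it approximates a preset with a system-message style instruction in the common chat-message format. The instruction strings, segment names, the 0.02 accuracy tolerance, and helpers such as `choose_persona` and `regressed_personas` are all hypothetical. It also folds in the domain-strength check: a persona whose accuracy on your own evaluation set trails the Default persona by more than the tolerance is blocked and routed back to Default.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical style instructions approximating ChatGPT presets.
# These are illustrative assumptions, not OpenAI's actual preset prompts.
PERSONA_INSTRUCTIONS = {
    "default": "Respond helpfully and clearly.",
    "professional": "Respond in a precise, formal, businesslike tone.",
    "efficient": "Respond as concisely as possible; omit pleasantries.",
    "friendly": "Respond in a warm, conversational tone.",
    "quirky": "Respond playfully, with light humor, while staying accurate.",
}

# Hypothetical mapping from audience segment to persona name.
SEGMENT_TO_PERSONA = {
    "enterprise": "professional",
    "developer": "efficient",
    "consumer": "friendly",
    "creative": "quirky",
}

FALLBACK_PERSONA = "default"


@dataclass
class EvalResult:
    """Correct/total counts for one persona on your own (hypothetical) eval set."""
    persona: str
    correct: int
    total: int

    @property
    def accuracy(self) -> float:
        return self.correct / self.total if self.total else 0.0


def regressed_personas(results: list[EvalResult], tolerance: float = 0.02) -> set[str]:
    """Return personas whose accuracy trails the fallback persona by more than `tolerance`."""
    by_name = {r.persona: r for r in results}
    baseline = by_name.get(FALLBACK_PERSONA)
    if baseline is None:
        # No baseline measured, so nothing can be judged as a regression.
        return set()
    return {
        r.persona
        for r in results
        if r.persona != FALLBACK_PERSONA and baseline.accuracy - r.accuracy > tolerance
    }


def choose_persona(segment: str, blocked: set[str]) -> str:
    """Pick a persona for a segment, falling back when it is unknown or blocked."""
    persona = SEGMENT_TO_PERSONA.get(segment, FALLBACK_PERSONA)
    if persona not in PERSONA_INSTRUCTIONS or persona in blocked:
        return FALLBACK_PERSONA
    return persona


def build_messages(segment: str, user_text: str, blocked: set[str]) -> list[dict[str, str]]:
    """Build a chat-style message list with the persona's instruction as the system message."""
    persona = choose_persona(segment, blocked)
    return [
        {"role": "system", "content": PERSONA_INSTRUCTIONS[persona]},
        {"role": "user", "content": user_text},
    ]


if __name__ == "__main__":
    # Made-up evaluation counts, for illustration only.
    results = [
        EvalResult("default", 90, 100),
        EvalResult("professional", 89, 100),
        EvalResult("quirky", 84, 100),
    ]
    blocked = regressed_personas(results)
    print(sorted(blocked))
    print(choose_persona("creative", blocked))
    print(choose_persona("enterprise", blocked))
```

With those illustrative counts, "quirky" trails Default by six points and is blocked, so the "creative" segment falls back to Default while "enterprise" keeps Professional. Keeping the fallback decision in one function makes it easy to swap in a stricter policy, such as per-domain baselines, without touching the message-building code.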

Looking ahead: What this signals for AI’s future

Personalization becomes table stakes. As models improve, the next frontier isn’t just “more accurate” but “more you”: personality, adaptation, and style memory all matter.

Platformization of voice/tone. Just as brands pick fonts & colors, we’ll see apps pick “model voices”. One size no longer fits all.

Risk of divergence. As each persona becomes a branch, ensuring baseline performance, fairness, and safety becomes harder. We may see growing efforts around certification or “safe style” regimes.

Human-AI relationship dynamics evolve. With richer conversational styles, users may feel more affinity or emotional connection to the model, which raises questions about transparency (does it know you?), boundaries (what feelings are okay to mimic?), and trust (when does style trump substance?).

Final word

GPT-5.1’s rollout of eight new personality presets is more than a cosmetic update: it signals a shift in how AI is packaged, from a generic “smart assistant” to a customizable conversational partner. OpenAI is not only trying to right the user-experience ship after criticism of earlier models but also staking its claim in an evolving AI market where tone, feel, and context matter almost as much as raw horsepower. That said, success will depend not just on delivering novel voices but on ensuring each persona still meets rigorous standards of accuracy, safety, and utility.